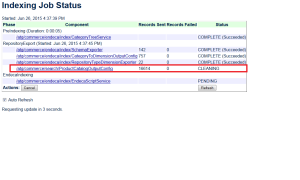

Possible symptoms in the log:

/atg/commerce/endeca/index/ProductCatalogSimpleIndexingAdmin

---java.lang.RuntimeException:

org.apache.commons.httpclient.ConnectTimeoutException:

The host did not accept the connection within timeout of 30000 ms

This error means ATG could not open a connection to the Endeca CAS server within the timeout. The cause is usually a configuration issue or a networking issue between ATG and CAS.

Check:

1. Check that the CASHostName and CASPort properties are set correctly in the following components:

   - /atg/endeca/index/SchemaDocumentSubmitter
   - /atg/endeca/index/DataDocumentSubmitter
   - /atg/endeca/index/DimensionDocumentSubmitter

   Also modify the /atg/endeca/index/IndexingApplicationConfiguration component (in ATG) accordingly.

2. Ping the target Endeca CAS server from the command line on the ATG host to verify that it is reachable over the network.

3. Make sure DNS is not misconfigured: the CAS hostname must resolve to the IP address of the actual CAS server, not to some other server.
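For step 1, one quick way to spot a mismatch is to compare the CASHostName and CASPort values across the three submitter components. The Java sketch below is a hypothetical helper, not part of ATG. It makes two assumptions:

- Each component is configured in a plain .properties file under a single config layer. The `localconfig` default path is an assumption; pass your own.
- The property names are exactly as listed in step 1.

Nucleus merges several configuration layers, so a value missing from the one layer inspected here may still be set elsewhere. Treat the output as a hint, not as the effective configuration.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

// Hypothetical consistency check for the CAS settings of the submitter components.
public class CasConfigCheck {

    public static final String[] COMPONENTS = {
        "/atg/endeca/index/SchemaDocumentSubmitter",
        "/atg/endeca/index/DataDocumentSubmitter",
        "/atg/endeca/index/DimensionDocumentSubmitter"
    };

    // Returns the problems found; an empty list means every component has the same valid host and port.
    public static List<String> check(Map<String, Properties> configs) {
        List<String> problems = new ArrayList<>();
        String firstHost = null;
        String firstPort = null;
        for (Map.Entry<String, Properties> e : configs.entrySet()) {
            String host = e.getValue().getProperty("CASHostName", "").trim();
            String port = e.getValue().getProperty("CASPort", "").trim();
            if (host.isEmpty()) {
                problems.add(e.getKey() + ": CASHostName is not set in this layer");
            }
            if (!isValidPort(port)) {
                problems.add(e.getKey() + ": CASPort '" + port + "' is not a valid port");
            }
            if (firstHost == null) {
                // The first component seen is the reference the others are compared against.
                firstHost = host;
                firstPort = port;
            } else if (!host.equals(firstHost) || !port.equals(firstPort)) {
                problems.add(e.getKey() + ": CAS host/port differs from " + firstHost + ":" + firstPort);
            }
        }
        return problems;
    }

    static boolean isValidPort(String port) {
        try {
            int p = Integer.parseInt(port);
            return p > 0 && p <= 65535;
        } catch (NumberFormatException ex) {
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed config layer root; each component maps to <root>/atg/endeca/index/<Name>.properties.
        Path configRoot = Paths.get(args.length > 0 ? args[0] : "localconfig");
        Map<String, Properties> configs = new LinkedHashMap<>();
        for (String component : COMPONENTS) {
            Path file = configRoot.resolve(component.substring(1) + ".properties");
            Properties props = new Properties();
            if (Files.exists(file)) {
                try (Reader reader = Files.newBufferedReader(file)) {
                    props.load(reader);
                }
            }
            configs.put(component, props);
        }
        List<String> problems = check(configs);
        problems.forEach(System.out::println);
        System.out.println(problems.isEmpty() ? "CAS settings look consistent" : problems.size() + " problem(s) found");
    }
}
```

Usage (the path is a placeholder): `java CasConfigCheck /path/to/localconfig`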
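Steps 2 and 3 can also be checked from the ATG host with a small Java program. Ping only tests ICMP reachability, while the log error is a TCP connect timeout. The sketch below therefore also tries to open a TCP connection to the CAS port with the same 30000 ms timeout. It additionally compares the resolved addresses with the IP you expect for the CAS server. The class name, arguments and expected-IP comparison are illustrative assumptions, not part of ATG or Endeca.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;
import java.util.Arrays;

// Hypothetical diagnostic (not part of ATG or Endeca): run it on the ATG host.
public class CasConnectivityCheck {

    // Step 3: true if any address the host name resolves to equals expectedIp.
    public static boolean resolvesTo(String hostName, String expectedIp) throws UnknownHostException {
        InetAddress expected = InetAddress.getByName(expectedIp);
        for (InetAddress addr : InetAddress.getAllByName(hostName)) {
            if (addr.equals(expected)) {
                return true;
            }
        }
        return false;
    }

    // Step 2 at the TCP level: true if a connection to host:port opens within timeoutMs.
    public static boolean canConnect(String hostName, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(hostName, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // A SocketTimeoutException here matches the symptom in the ATG log;
            // a ConnectException usually means the host answered but nothing listens on the port.
            System.err.println("Connect to " + hostName + ":" + port + " failed: " + e);
            return false;
        }
    }

    public static void main(String[] args) throws UnknownHostException {
        if (args.length < 2) {
            System.err.println("usage: java CasConnectivityCheck <casHost> <casPort> [expectedIp]");
            System.exit(2);
        }
        String host = args[0];
        int port = Integer.parseInt(args[1]);
        System.out.println(host + " resolves to " + Arrays.toString(InetAddress.getAllByName(host)));
        if (args.length > 2) {
            System.out.println("Resolves to expected IP " + args[2] + ": " + resolvesTo(host, args[2]));
        }
        // 30000 ms mirrors the timeout reported in the log.
        System.out.println("TCP connect to CAS port OK: " + canConnect(host, port, 30_000));
    }
}
```

Usage (all values are placeholders): `java CasConnectivityCheck <casHost> <casPort> [expectedIp]`. If the TCP connect times out but ping succeeds, a firewall between ATG and CAS is a likely suspect.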